Working with AI is always fun. In this guide we will use TensorFlow's TFLite library to run machine learning models on the NUCLEO-WB55RG development board. TFLite does not support the STM32 chip on this Nucleo board out of the box, so in this blog I describe how I ported it. The procedure can be adapted to other chips with very few tweaks. I chose the NUCLEO-WB55RG for its generous memory and its Arm Cortex-M4 core with FPU support.

TensorFlow Lite (TFLite) is a lightweight version of the TensorFlow library, specifically designed for deployment on mobile and embedded devices. It allows developers to run machine learning models on devices with limited computational resources and memory.

Some of the key features of TFLite include:

- Small footprint: TFLite is optimized for devices with limited resources, and its binary size is smaller compared to the full TensorFlow library.

- Hardware acceleration: TFLite supports a wide range of hardware accelerators, including GPUs, DSPs, and NPUs (Neural Processing Units), to improve the performance of machine learning models on devices.

- Interpreter: TFLite uses an interpreter to execute machine learning models, which allows for flexibility in terms of the devices and platforms it can run on.

- Cross-platform compatibility: TFLite can run on a variety of platforms, including Android, iOS, Linux, and microcontrollers, making it easy to deploy machine learning models on a wide range of devices.

- Model conversion: TFLite provides tools for converting pre-trained TensorFlow models to TFLite models, which can be used on mobile and embedded devices.

- Support for a wide range of models: TFLite supports a wide range of machine learning models, including image classification, object detection, and text-to-speech, among others.

- Automatic quantization: TFLite's converter can apply post-training quantization to 8-bit integer precision, which reduces the model's memory footprint and computation time and makes it more efficient on resource-constrained devices.
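To make the quantization point concrete, here is a minimal sketch of the affine int8 mapping that 8-bit quantization is built on: a real value is stored as an integer, and recovered as `(q - zero_point) * scale`. The function names are my own for illustration and are not part of the TFLite API, and a real converter picks the ranges from calibration data rather than from hand-supplied limits.

```python
def choose_int8_params(x_min, x_max):
    """Pick a scale and zero point that map [x_min, x_max] onto int8 [-128, 127]."""
    # The range is widened to include 0.0 so that zero maps to an exact integer.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    if x_max == x_min:
        return 1.0, 0  # degenerate range: any scale works
    scale = (x_max - x_min) / 255.0
    zero_point = int(round(-128 - x_min / scale))
    zero_point = max(-128, min(127, zero_point))
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Map a float to int8, clamping values that fall outside the range."""
    q = int(round(x / scale)) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate float value from its int8 representation."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    scale, zp = choose_int8_params(-1.0, 1.0)
    for x in (-1.0, -0.5, 0.0, 0.25, 1.0):
        q = quantize(x, scale, zp)
        print(x, q, dequantize(q, scale, zp))
```

Each in-range value comes back within one quantization step (`scale`) of the original, which is the accuracy traded for a 4x smaller weight compared with 32-bit floats.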

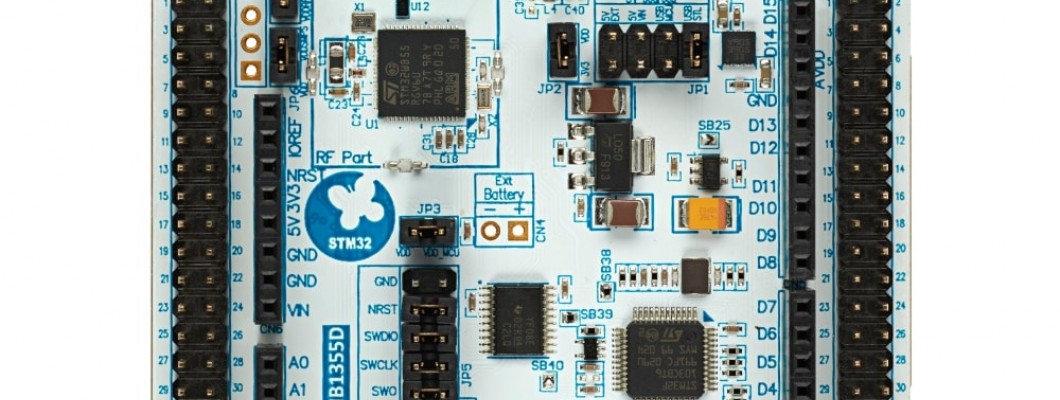

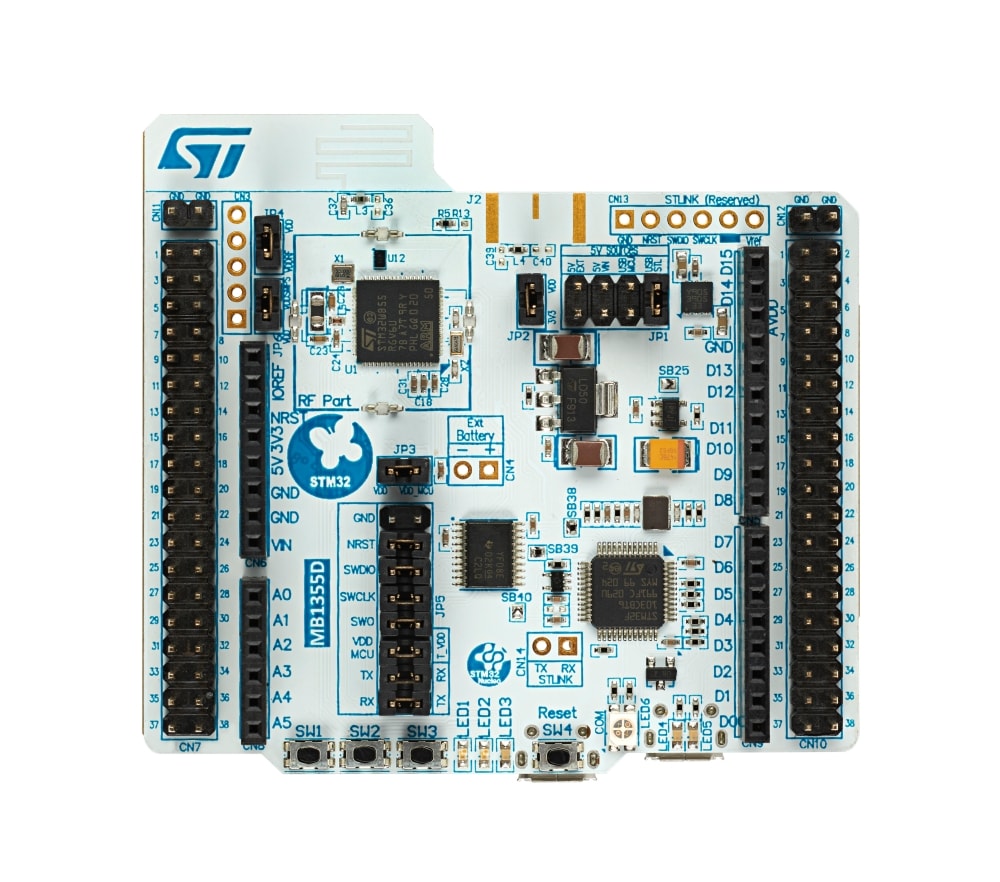

The NUCLEO-WB55RG is a development board for the STM32WB55 microcontroller from STMicroelectronics. It is part of the STM32 Nucleo development board series, which provides a flexible and cost-effective way for developers to evaluate and prototype with the STM32WB55 microcontroller.

Some of the key features of the NUCLEO-WB55RG include:

- On-board STM32WB55 microcontroller: The development board is equipped with an STM32WB55 microcontroller, which has a Cortex-M4 main core and a Cortex-M0+ co-processor, and built-in support for Bluetooth 5 and 802.15.4 wireless standards.

- Expansion connectors: The board has a variety of expansion connectors, including an Arduino Uno V3 connector and a morpho connector, which allow for easy connection to various external devices and sensors.

- On-board debugging: The NUCLEO-WB55RG has an on-board ST-LINK/V2-1 debugger, which provides a convenient way to debug and program the microcontroller.

- Power options: The board can be powered through the USB connector or an external source, and has a voltage regulator that accepts an input voltage between 7 V and 12 V.

- Software support: The NUCLEO-WB55RG is supported by the STM32CubeWB software package, which provides a wide range of software examples, libraries, and tools for developing with the STM32WB55 microcontroller.

- Compatibility: The board is also compatible with a wide range of development environments, including IAR, Keil, and GCC.

- Physical form factor: The board has a compact form factor of 69.9 x 53.3 mm, so it is easy to handle and to take along on your development journey.

The STM32WB55 microcontroller is a member of the STM32WB5x family from STMicroelectronics. Some of its key features include:

- Dual core architecture: The STM32WB55 features a Cortex-M4 main core and a Cortex-M0+ co-processor, which allows for efficient processing of both real-time and network-related tasks.

- Wireless connectivity: The microcontroller has built-in support for Bluetooth 5 and 802.15.4 wireless standards, providing low-power wireless connectivity options for IoT devices.

- Memory: It has up to 1 MB of Flash memory and up to 256 KB of SRAM, which leaves room for large program code and data.

- Peripherals: The microcontroller features a wide range of peripherals, including timers, ADCs, DACs, communication interfaces (SPI, I2C, UART, etc.), and a variety of other interfaces (USB, I2S, etc.).

- Power management: It has low power consumption, which extends battery life in portable devices, and it offers a variety of low-power modes that conserve power when the device is not in use.

- Development support: The STM32WB55 is supported by a wide range of development tools, including the STM32CubeMX code generation tool, the STM32CubeWB software package, and various third-party development environments such as IAR, Keil, and GCC.

- Security: The microcontroller has built-in security features such as a secure bootloader and a hardware-based security mechanism (AES-256 and TRNG) to protect against external attacks and unauthorized access.
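Since memory is the main constraint when running a model on a microcontroller, it can help to do a rough budget before porting anything. The sketch below is a hypothetical helper of my own, not part of any ST or TensorFlow tool. It assumes the model is linked into Flash as constant data alongside the application code, and that the TFLite Micro tensor arena lives in SRAM alongside the stack, heap, and other buffers.

```python
def check_memory_budget(model_bytes, code_bytes, arena_bytes, other_ram_bytes,
                        flash_bytes, sram_bytes):
    """Return a summary of whether a model plus application fits the target.

    model_bytes     -- size of the .tflite model (assumed stored in Flash)
    code_bytes      -- size of application code and other constants in Flash
    arena_bytes     -- size of the tensor arena (assumed stored in SRAM)
    other_ram_bytes -- stack, heap, and buffers used by the rest of the app
    flash_bytes     -- Flash available on the target, from the datasheet
    sram_bytes      -- SRAM available to the application, from the datasheet
    """
    flash_used = model_bytes + code_bytes
    ram_used = arena_bytes + other_ram_bytes
    return {
        "flash_used": flash_used,
        "flash_free": flash_bytes - flash_used,
        "ram_used": ram_used,
        "ram_free": sram_bytes - ram_used,
        "fits": flash_used <= flash_bytes and ram_used <= sram_bytes,
    }
```

Pass in the Flash and SRAM sizes for your exact part, and take the code and RAM figures from the build output of your IDE. On a dual-core part, some memory may be reserved for the wireless stack, so the usable figures can be lower than the headline numbers.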

Porting TensorFlow Lite (TFLite) to STM32 microcontrollers involves several steps, including:

- Building TFLite for the ARM Cortex-M architecture used by STM32 microcontrollers.

- Porting TFLite's dependencies, such as the flatbuffers library, to the STM32 platform.

- Adapting TFLite's C++ code to run on the STM32 platform, including any necessary changes to memory management and threading.

- Testing the ported TFLite on the STM32 microcontroller.

Note that TensorFlow Lite is not fully self-contained: it relies on other libraries such as FlatBuffers, and it may require additional libraries or tools depending on the specific STM32 microcontroller and development environment being used.

To use TensorFlow Lite (TFLite) with STM32CubeIDE, you will need to follow these general steps:

- Install STM32CubeIDE: You can download and install STM32CubeIDE from the STMicroelectronics website.

- Create a new project: Once you have STM32CubeIDE installed, you can create a new project by selecting "File" -> "New" -> "STM32 Project" from the menu.

- Import TFLite: You can import the TFLite library into your project by going to "Project Explorer" -> "Project" -> "Properties" -> "C/C++ Build" -> "Settings" -> "MCU GCC Linker" -> "Libraries". Here you can add the TFLite library by clicking the "Add" button, then "Library", and then browsing to the TFLite library location on your computer.

- Configure the project settings: In the project settings, you will need to configure the memory settings, include paths, and other settings to match your target device and the TFLite library.

- Add TFLite code to your project: Once the TFLite library has been imported, you can add TFLite code to your project by adding the necessary TFLite headers and source files to your project.

- Build and run: Once you have added the TFLite code to your project, you can build and run the project on your target device.

- Test: You can test your TFLite model on the STM32 microcontroller by running the test code on the device.
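One practical detail of the "add TFLite code" step: a microcontroller usually has no filesystem, so the `.tflite` model is typically compiled into the firmware as a byte array (the `xxd -i` command is often used for this). Below is a minimal Python sketch of the same idea. The function name and the `g_model` array name are placeholders of my own, and the generated source is meant to be compiled as C++ because it uses `alignas`.

```python
import sys


def model_to_c_source(model_bytes, array_name="g_model"):
    """Render raw model bytes as a C++ source snippet defining a byte array."""
    lines = []
    # Emit 12 bytes per line, similar to the layout produced by xxd -i.
    for i in range(0, len(model_bytes), 12):
        chunk = model_bytes[i:i + 12]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"alignas(16) const unsigned char {array_name}[] = {{\n"
        f"{body}\n"
        f"}};\n"
        f"const unsigned int {array_name}_len = {len(model_bytes)};\n"
    )


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python this_script.py path/to/model.tflite > model_data.cc
    with open(sys.argv[1], "rb") as f:
        sys.stdout.write(model_to_c_source(f.read()))
```

The output file can then be added to the project's source locations like any other `.cc` file, and the array is handed to the interpreter at startup.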

As noted above, TFLite runs on many platforms, but to use it on an STM32 microcontroller you need to build it for the Arm Cortex-M architecture and adapt the C++ code to the STM32 platform.

The README linked below describes the procedure for porting TFLite from scratch.

https://github.com/elementzonline/tflite-micro-nucleo-wb55rg-examples/blob/main/README.md

The Hello1 example can be opened in STM32CubeIDE to get started quickly.

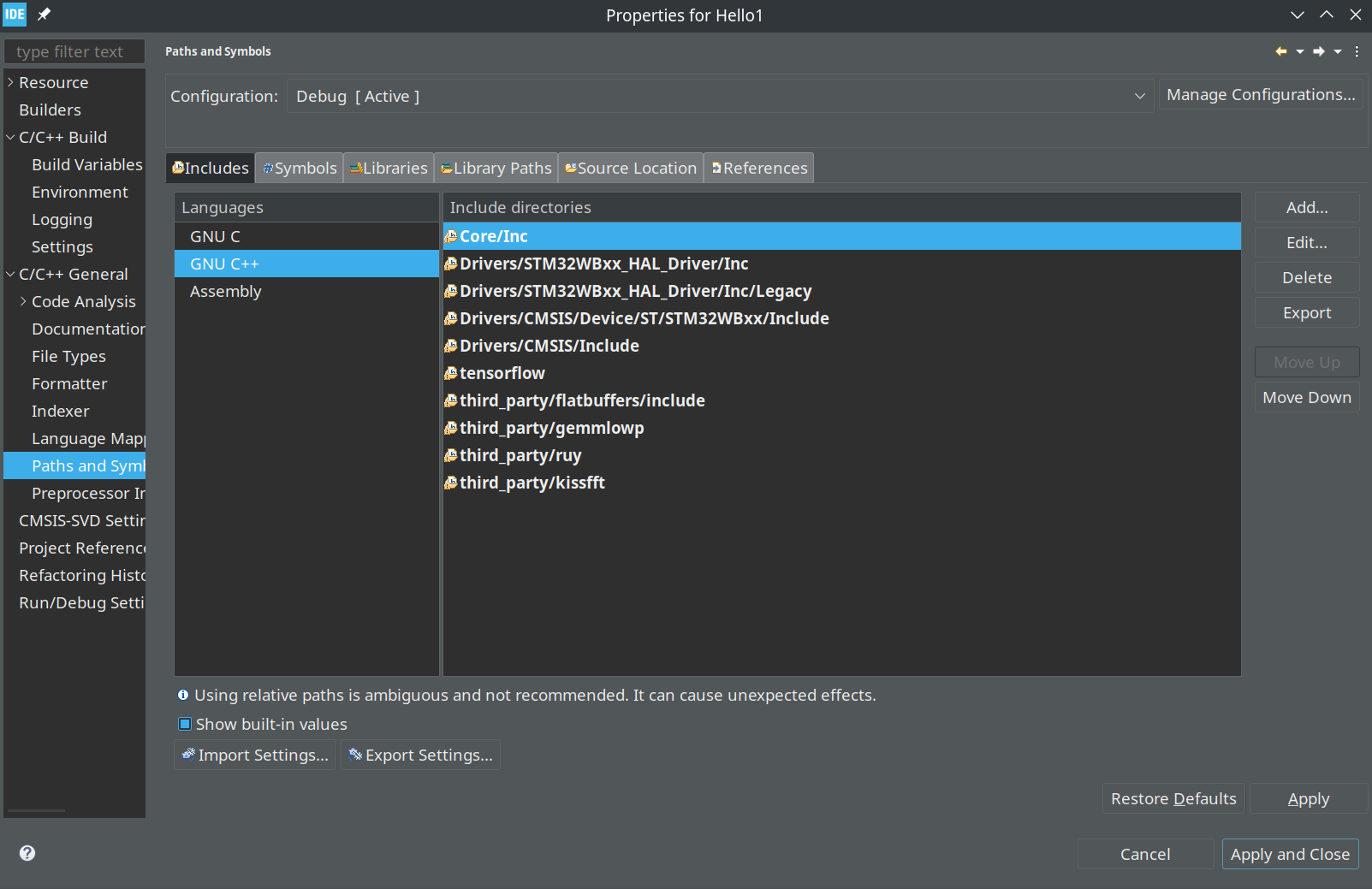

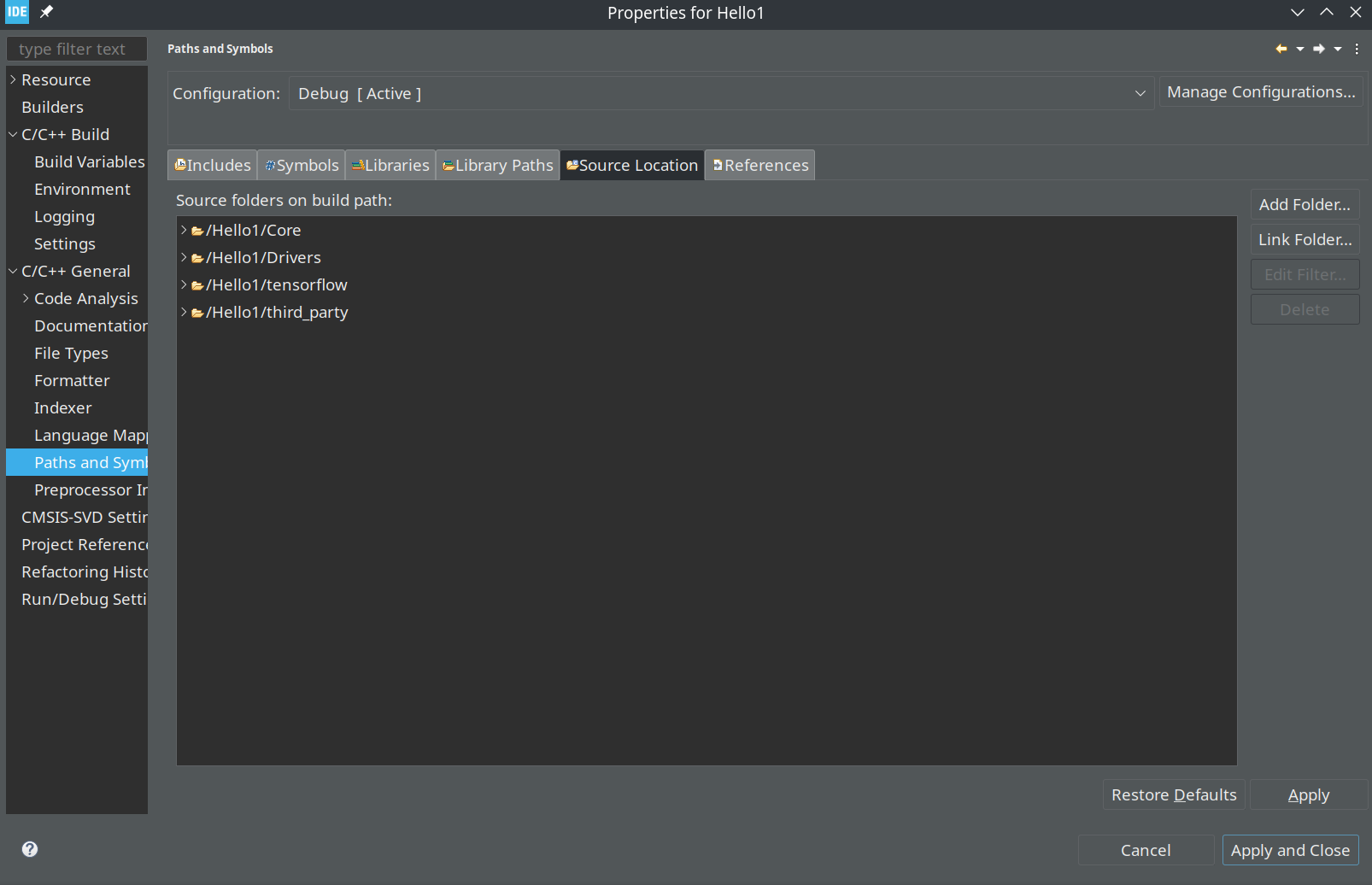

The essential project settings for running the examples are shown below:

1. Add the TF include directories

2. Include the source locations

For the Hello1 example, the ST-LINK serial console (115200 baud, 8N1) shows each input and the corresponding predicted output. The model was trained on sine wave input-output pairs.
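To sanity-check what appears on the console, you can generate reference sine values on the host and compare them by eye or in a script. This is a small sketch under my own assumptions: inputs spanning one period from 0 to 2π and an arbitrary tolerance of 0.1. Neither is taken from the Hello1 source, so adjust both to match what the example actually prints.

```python
import math


def reference_sine_table(n_points=8):
    """Return (x, sin(x)) pairs for n_points evenly spaced x in [0, 2*pi)."""
    step = 2.0 * math.pi / n_points
    return [(i * step, math.sin(i * step)) for i in range(n_points)]


def within_tolerance(predicted, x, tol=0.1):
    """True if the model's prediction for x is within tol of math.sin(x)."""
    return abs(predicted - math.sin(x)) <= tol


if __name__ == "__main__":
    for x, y in reference_sine_table():
        print(f"x={x:.3f}  sin(x)={y:.3f}")
```

A small model will not reproduce the sine curve exactly, so expect visible but bounded differences between the console values and the reference.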

"This blog post was crafted by the brilliant mind of ChatGPT, but let's be real, the screenshots were definitely taken by someone way cooler - me!"